Photo: Central Sussex College (CC BY-NC-ND)

Why Quality Metrics is a really bad idea

Liz Hill tells NPOs, ‘just say no’ to a fundamentally flawed scheme that will reveal more about the nature of the audience than the quality of an arts organisation’s artistic work.

Let’s put this out there right at the start. I think it is nothing short of fantasy to suggest that standardised metrics and aggregated data can deliver a form of benchmarking that will give Arts Council England (ACE) an overview of the quality of the work it funds.

I speak from experience. In 2003 I was the lead consultant on an exploratory research project that the Scottish Arts Council (SAC) ran to test – and I quote from the brief – “a new system for gathering audience opinions of arts activity in Scotland” which would “track audience opinions consistently over time and be recognised by Core Funded Organisations (CFOs) as representing the views of their audiences”. It came about because Scotland’s CFOs were unhappy with the system of peer review being used to assess the quality of their work, but it was rapidly abandoned when the drawbacks of the approach became apparent.

A quantitative approach

A standardised questionnaire was drawn up in collaboration with SAC and its clients, with some adaptations to create versions suitable for the performing arts, visual arts, festivals and participatory work. It was piloted by 11 arts organisations. Average scores were used as benchmarks against which to review the findings from each individual organisation.

Not surprisingly, the findings and feedback from the participating organisations bore a remarkable resemblance to those reported 13 years later in the independent evaluation of the Quality Metrics pilot. For example:

- Participating organisations found the data collection process hugely onerous, which led to small and unrepresentative samples, which undermined the data analysis

- Public evaluations of the artistic work were overwhelmingly positive, casting doubt over the ability of a numeric scale to capture any valuable critical feedback

- The quantitative approach was rejected as completely irrelevant by workshop leaders delivering participatory work, and in any case the number of participants taking part in each event was too small to produce useful findings

- Individuals on the margins of society and those whose first language was not English had to be helped to complete the questionnaires.

A tale of two cities

But perhaps the most interesting finding of all was revealed when a company took a production on tour to two different locations in Scotland: same company; same production; same cast; same everything – except venue.

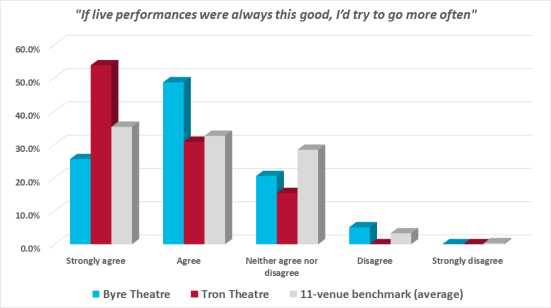

The two venues were Glasgow’s Tron Theatre and the Byre Theatre in St Andrews. Audiences at the Tron loved it, and responded very positively to the statement: “If live performances were always this good, I’d try to go more often.” The opposite was true of audiences at the Byre. We were scratching our heads as to why there was such a difference between the two, until we read the free text comments section on the questionnaires: the St Andrews audience objected strongly to the swearing in the play.

This incident provides a graphic illustration of the fundamental weakness of the whole Quality Metrics approach: it tells you a lot about the nature of the audience, but virtually nothing about the quality of the artistic work.

In the case of the two Scottish venues, had there been no opportunity for respondents to give a free-text response, it would have been all too easy to conclude that the company had had an ‘off day’ in St Andrews. In the absence of context, the potential for misinterpreting data is endless.

Fundamental weaknesses

Setting aside the insurmountable logistical challenges that will face some of the organisations having to conduct the fieldwork for the Quality Metrics scheme, there are two fatal flaws with the research framework that will render the findings meaningless.

#1 Validity

Technically speaking, research is described as ‘valid’ if it measures what it is supposed to measure – in this case, the quality of artistic work. Most of the Quality Metrics fall down in this regard.

Metric number three is probably the worst offender:

- Distinctiveness: it was different from things I’ve experienced before

Audience responses to this will depend entirely on what the respondent has experienced before, and how they define ‘different’. Vivaldi’s Four Seasons might be a very ‘different’ experience for a first-time orchestral attender whose art form of choice is thrash metal, but not very different at all for someone who likes to attend popular orchestral classics and listens to Classic FM.

And what exactly makes an experience ‘different’? Is it the performers that are being compared? The artistic programme? Costumes? Choreography? Musicians? Set? Audience? Venue?

Or will some people describe something as ‘different’ simply because it’s better – or worse – than what they’ve experienced before? Are we supposed to assume that ‘different’ is always good? If not, then comparing the responses from two or more organisations – or even two or more events by the same organisation – becomes a nonsense.

Another serious offender is:

- Captivation: it was absorbing and held my attention

We’re all interested in different things. I happen to find sub-titled Scandi crime dramas completely absorbing, whereas for much of the UK population, subtitles are a complete switch off – literally. I suspect the same is true of Shakespeare, opera, contemporary art and jazz. If you’re really into it, it’s captivating; if you aren’t, it isn’t.

#2 Sampling

It’s not just the Metrics themselves that will frustrate ACE’s ambitions for its Quality Metrics scheme. As the Scottish pilot project discovered, it is completely unrealistic to expect arts organisations to be both able and willing to survey a truly representative sample of their audience.

Sampling isn’t an art – it’s a science rooted in statistics. Sampling error arises when it is done badly. Too small a sample of respondents and it isn’t possible to conclude statistically – the whole point of quantitative analysis – that their views represent the audience as a whole.

And it’s not just about the size of the sample. Statistical confidence in research findings is undermined by non-random samples, such as self-selecting respondents, or those selected by a researcher on the basis of who they can persuade to fill in a questionnaire. These respondents can be completely unrepresentative of the audience as a whole.
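To put rough numbers on the sample-size point, here is a minimal sketch using the standard textbook approximation for the 95% margin of error on a proportion. The function name and the sample sizes are illustrative assumptions, not figures from either pilot, and the formula assumes a simple random sample, which is precisely what a self-selecting audience survey is not.

```python
import math

def margin_of_error(sample_size, proportion=0.5, z=1.96):
    """Approximate 95% margin of error for a proportion.

    Assumes a simple random sample; proportion=0.5 is the worst case.
    The name and defaults are illustrative, not part of any ACE scheme.
    """
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)

# Hypothetical sample sizes, chosen only to show the trend.
for n in (50, 200, 1000):
    print(f"n={n}: +/- {margin_of_error(n) * 100:.1f} percentage points")
```

The margin narrows as the sample grows, but only for random samples. No sample size corrects for respondents who choose themselves, or who are chosen because they are easy to persuade.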

For all its intentions of policing the system, ACE should not underestimate the guile of the arts community when it comes to manipulating the sampling to prove their artistic quality (and protect their funding). If I were an NPO, I’d be segmenting my database and sending post-show questionnaires to my most loyal bookers. And if I were a touring company taking a sexually explicit play to both Glasgow and St Andrews, I know where I’d be choosing to conduct the survey.

What next?

Aside from the colossal waste of public money, my main beef with the Quality Metrics scheme is that these fatal flaws mean it will deliver not only meaningless, but also misleading figures that will be used to justify the quality – or otherwise – of artistic work.

Call me Cassandra if you like, but make no mistake, this will be abandoned. Arts organisations will quickly realise that this is a time-consuming and costly process that generates no useful information that they can act upon. It could be useful, perhaps, as a source of selective ‘evidence’ that can be used to dress up a funding application – but I promise there are easier and cheaper ways of doing that.

ACE will quickly realise that the flawed methodology won’t permit any meaningful assessment of quality, and that quality comparisons between organisations – even organisations presenting the same art form – simply can’t be justified. I’d love to be a fly on the wall in a conversation between ACE and an arts organisation, if ACE were ever to try and justify withholding funding based on the findings from Quality Metrics.

And, of course, the DCMS (where statistical analysis is properly understood – see their impressive Taking Part figures) will know that the methodology being used for data collection breaks so many rules in the quantitative research book, and will never accept the Quality Metrics findings as solid evidence of the ‘value’ of ACE’s investment in culture.

Time for a rethink

ACE needs to rethink – preferably before it wastes a huge swathe of public money on this fruitless scheme from which no one will benefit but the service provider.

That’s not to say that quantitative analysis has no place in quality measurement. It can deliver enlightening findings. But the indefinability of ‘art’, the subjective nature of ‘quality’ and the highly context-specific nature of the live arts experience mean that unless the questions are phrased with great precision, and the findings interpreted with context in mind, the whole exercise will at best be meaningless and at worst lead to damaging actions based on misleading conclusions.

ACE could start by listening to NPOs. They rightly point out that a “bespoke, tailored approach” that aligns with their own artistic objectives would be most useful for them. It’s impossible to disagree. And with a £2.7m budget, it’s not an impossible dream.

In the meantime, to NPOs required to sign up to Quality Metrics: just say no. Take a leaf out of the book of Dorothy Wilson, who told AP a few years ago that her board was “well versed in striking out unreasonable contract clauses”. It’s always an option.
